Tutorial Objectives#

Estimated timing of tutorial: 1 hour, 30 minutes

This is the first of two core tutorials on Bayesian statistics. In these tutorials, we will explore the fundamental concepts of the Bayesian approach. In this tutorial you will work through an example of Bayesian inference and decision making using a binary hidden state. The second tutorial extends these concepts to a continuous hidden state. In the related NMA days, each of these basic ideas will be extended. In Hidden Dynamics, we consider these ideas through time as you explore what happens when we infer a hidden state using repeated observations and when the hidden state changes across time. On the Optimal Control day, we will introduce the notion of how to use inference and decisions to select actions for optimal control.

This notebook will introduce the fundamental building blocks for Bayesian statistics:

How do we combine the possible loss (or gain) for making a decision with our probabilistic knowledge?

How do we use probability distributions to represent hidden states?

How does marginalization work and how can we use it?

How do we combine new information with our prior knowledge?

Setup#

Install and import feedback gadget#

Show code cell source

# @title Install and import feedback gadget

!pip3 install vibecheck datatops --quiet

from vibecheck import DatatopsContentReviewContainer

def content_review(notebook_section: str):

return DatatopsContentReviewContainer(

"", # No text prompt

notebook_section,

{

"url": "https://pmyvdlilci.execute-api.us-east-1.amazonaws.com/klab",

"name": "neuromatch_cn",

"user_key": "y1x3mpx5",

},

).render()

feedback_prefix = "W3D1_T1"

# Imports

import numpy as np

import matplotlib.pyplot as plt

from matplotlib import patches, transforms, gridspec

from scipy.optimize import fsolve

from collections import namedtuple

Figure Settings#

Show code cell source

# @title Figure Settings

import logging

logging.getLogger('matplotlib.font_manager').disabled = True

import ipywidgets as widgets # interactive display

from ipywidgets import GridspecLayout, HBox, VBox, FloatSlider, Layout, ToggleButtons

from ipywidgets import interactive, interactive_output, Checkbox, Select

from IPython.display import clear_output

%config InlineBackend.figure_format = 'retina'

plt.style.use("https://raw.githubusercontent.com/NeuromatchAcademy/course-content/main/nma.mplstyle")

import warnings

warnings.filterwarnings("ignore")

Plotting Functions#

Show code cell source

# @title Plotting Functions

from matplotlib import colors

def plot_joint_probs(P, ):

assert np.all(P >= 0), "probabilities should be >= 0"

# normalize if not

P = P / np.sum(P)

marginal_y = np.sum(P,axis=1)

marginal_x = np.sum(P,axis=0)

# definitions for the axes

left, width = 0.1, 0.65

bottom, height = 0.1, 0.65

spacing = 0.005

# start with a square Figure

fig = plt.figure(figsize=(5, 5))

joint_prob = [left, bottom, width, height]

rect_histx = [left, bottom + height + spacing, width, 0.2]

rect_histy = [left + width + spacing, bottom, 0.2, height]

rect_x_cmap = plt.cm.Blues

rect_y_cmap = plt.cm.Reds

# Show joint probs and marginals

ax = fig.add_axes(joint_prob)

ax_x = fig.add_axes(rect_histx, sharex=ax)

ax_y = fig.add_axes(rect_histy, sharey=ax)

# Show joint probs and marginals

ax.matshow(P,vmin=0., vmax=1., cmap='Greys')

ax_x.bar(0, marginal_x[0], facecolor=rect_x_cmap(marginal_x[0]))

ax_x.bar(1, marginal_x[1], facecolor=rect_x_cmap(marginal_x[1]))

ax_y.barh(0, marginal_y[0], facecolor=rect_y_cmap(marginal_y[0]))

ax_y.barh(1, marginal_y[1], facecolor=rect_y_cmap(marginal_y[1]))

# set limits

ax_x.set_ylim([0, 1])

ax_y.set_xlim([0, 1])

# show values

ind = np.arange(2)

x,y = np.meshgrid(ind,ind)

for i,j in zip(x.flatten(), y.flatten()):

c = f"{P[i, j]:.2f}"

ax.text(j,i, c, va='center', ha='center', color='black')

for i in ind:

v = marginal_x[i]

c = f"{v:.2f}"

ax_x.text(i, v +0.1, c, va='center', ha='center', color='black')

v = marginal_y[i]

c = f"{v:.2f}"

ax_y.text(v+0.2, i, c, va='center', ha='center', color='black')

# set up labels

ax.xaxis.tick_bottom()

ax.yaxis.tick_left()

ax.set_xticks([0,1])

ax.set_yticks([0,1])

ax.set_xticklabels(['Silver', 'Gold'])

ax.set_yticklabels(['Small', 'Large'])

ax.set_xlabel('color')

ax.set_ylabel('size')

ax_x.axis('off')

ax_y.axis('off')

return fig

def plot_prior_likelihood_posterior(prior, likelihood, posterior):

# definitions for the axes

left, width = 0.05, 0.3

bottom, height = 0.05, 0.9

padding = 0.12

small_width = 0.1

left_space = left + small_width + padding

added_space = padding + width

fig = plt.figure(figsize=(12, 4))

rect_prior = [left, bottom, small_width, height]

rect_likelihood = [left_space , bottom , width, height]

rect_posterior = [left_space + added_space, bottom , width, height]

ax_prior = fig.add_axes(rect_prior)

ax_likelihood = fig.add_axes(rect_likelihood, sharey=ax_prior)

ax_posterior = fig.add_axes(rect_posterior, sharey = ax_prior)

rect_colormap = plt.cm.Blues

# Show posterior probs and marginals

ax_prior.barh(0, prior[0], facecolor=rect_colormap(prior[0, 0]))

ax_prior.barh(1, prior[1], facecolor=rect_colormap(prior[1, 0]))

ax_likelihood.matshow(likelihood, vmin=0., vmax=1., cmap='Reds')

ax_posterior.matshow(posterior, vmin=0., vmax=1., cmap='Greens')

# Probabilities plot details

ax_prior.set(xlim=[1, 0], xticks=[], yticks=[0, 1],

yticklabels=['left', 'right'], title="Prior p(s)")

ax_prior.yaxis.tick_right()

ax_prior.spines['left'].set_visible(False)

ax_prior.spines['bottom'].set_visible(False)

# Likelihood plot details

ax_likelihood.set(xticks=[0, 1], xticklabels=['fish', 'no fish'],

yticks=[0, 1], yticklabels=['left', 'right'],

ylabel='state (s)', xlabel='measurement (m)',

title='Likelihood p(m (left) | s)')

ax_likelihood.xaxis.set_ticks_position('bottom')

ax_likelihood.spines['left'].set_visible(False)

ax_likelihood.spines['bottom'].set_visible(False)

# Posterior plot details

ax_posterior.set(xticks=[0, 1], xticklabels=['fish', 'no fish'],

yticks=[0, 1], yticklabels=['left', 'right'],

ylabel='state (s)', xlabel='measurement (m)',

title='Posterior p(s | m)')

ax_posterior.xaxis.set_ticks_position('bottom')

ax_posterior.spines['left'].set_visible(False)

ax_posterior.spines['bottom'].set_visible(False)

# show values

ind = np.arange(2)

x,y = np.meshgrid(ind,ind)

for i,j in zip(x.flatten(), y.flatten()):

c = f"{posterior[i, j]:.2f}"

ax_posterior.text(j, i, c, va='center', ha='center', color='black')

for i,j in zip(x.flatten(), y.flatten()):

c = f"{likelihood[i, j]:.2f}"

ax_likelihood.text(j, i, c, va='center', ha='center', color='black')

for i in ind:

v = prior[i, 0]

c = f"{v:.2f}"

ax_prior.text(v+0.2, i, c, va='center', ha='center', color='black')

def plot_prior_likelihood(ps, p_a_s1, p_a_s0, measurement):

likelihood = np.asarray([[p_a_s1, 1-p_a_s1], [p_a_s0, 1-p_a_s0]])

assert 0.0 <= ps <= 1.0

prior = np.asarray([ps, 1 - ps])

if measurement == "Fish":

posterior = likelihood[:, 0] * prior

else:

posterior = (likelihood[:, 1] * prior).reshape(-1)

posterior /= np.sum(posterior)

# definitions for the axes

left, width = 0.05, 0.3

bottom, height = 0.05, 0.9

padding = 0.12

small_width = 0.2

left_space = left + small_width + padding

small_padding = 0.05

fig = plt.figure(figsize=(12, 4))

rect_prior = [left, bottom, small_width, height]

rect_likelihood = [left_space , bottom , width, height]

rect_posterior = [left_space + width + small_padding, bottom , small_width, height]

ax_prior = fig.add_axes(rect_prior)

ax_likelihood = fig.add_axes(rect_likelihood, sharey=ax_prior)

ax_posterior = fig.add_axes(rect_posterior, sharey=ax_prior)

prior_colormap = plt.cm.Blues

posterior_colormap = plt.cm.Greens

# Show posterior probs and marginals

ax_prior.barh(0, prior[0], facecolor=prior_colormap(prior[0]))

ax_prior.barh(1, prior[1], facecolor=prior_colormap(prior[1]))

ax_likelihood.matshow(likelihood, vmin=0., vmax=1., cmap='Reds')

ax_posterior.barh(0, posterior[0], facecolor=posterior_colormap(posterior[0]))

ax_posterior.barh(1, posterior[1], facecolor=posterior_colormap(posterior[1]))

# Probabilities plot details

ax_prior.set(xlim=[1, 0], yticks=[0, 1], yticklabels=['left', 'right'],

title="Prior p(s)", xticks=[])

ax_prior.yaxis.tick_right()

ax_prior.spines['left'].set_visible(False)

ax_prior.spines['bottom'].set_visible(False)

# Likelihood plot details

ax_likelihood.set(xticks=[0, 1], xticklabels=['fish', 'no fish'],

yticks=[0, 1], yticklabels=['left', 'right'],

ylabel='state (s)', xlabel='measurement (m)',

title='Likelihood p(m | s)')

ax_likelihood.xaxis.set_ticks_position('bottom')

ax_likelihood.spines['left'].set_visible(False)

ax_likelihood.spines['bottom'].set_visible(False)

# Posterior plot details

ax_posterior.set(xlim=[0, 1], xticks=[], yticks=[0, 1],

yticklabels=['left', 'right'],

title="Posterior p(s | m)")

ax_posterior.spines['left'].set_visible(False)

ax_posterior.spines['bottom'].set_visible(False)

# show values

ind = np.arange(2)

x,y = np.meshgrid(ind, ind)

for i in ind:

v = posterior[i]

c = f"{v:.2f}"

ax_posterior.text(v+0.2, i, c, va='center', ha='center', color='black')

for i,j in zip(x.flatten(), y.flatten()):

c = f"{likelihood[i, j]:.2f}"

ax_likelihood.text(j, i, c, va='center', ha='center', color='black')

for i in ind:

v = prior[i]

c = f"{v:.2f}"

ax_prior.text(v+0.2, i, c, va='center', ha='center', color='black')

plt.show()

return fig

def plot_utility(ps):

prior = np.asarray([ps, 1 - ps])

utility = np.array([[2, -3], [-2, 1]])

expected = prior @ utility

# definitions for the axes

left, width = 0.05, 0.16

bottom, height = 0.05, 0.9

padding = 0.02

small_width = 0.1

left_space = left + small_width + padding

added_space = padding + width

fig = plt.figure(figsize=(17, 3))

rect_prior = [left, bottom, small_width, height]

rect_utility = [left + added_space , bottom , width, height]

rect_expected = [left + 2* added_space, bottom , width, height]

ax_prior = fig.add_axes(rect_prior)

ax_utility = fig.add_axes(rect_utility, sharey=ax_prior)

ax_expected = fig.add_axes(rect_expected)

rect_colormap = plt.cm.Blues

# Data of plots

ax_prior.barh(0, prior[0], facecolor=rect_colormap(prior[0]))

ax_prior.barh(1, prior[1], facecolor=rect_colormap(prior[1]))

ax_utility.matshow(utility, cmap='cool')

norm = colors.Normalize(vmin=-3, vmax=3)

ax_expected.bar(0, expected[0], facecolor=rect_colormap(norm(expected[0])))

ax_expected.bar(1, expected[1], facecolor=rect_colormap(norm(expected[1])))

# Probabilities plot details

ax_prior.set(xlim=[1, 0], xticks=[], yticks=[0, 1],

yticklabels=['left', 'right'], title="Probability of state")

ax_prior.yaxis.tick_right()

ax_prior.spines['left'].set_visible(False)

ax_prior.spines['bottom'].set_visible(False)

# Utility plot details

ax_utility.set(xticks=[0, 1], xticklabels=['left', 'right'],

yticks=[0, 1], yticklabels=['left', 'right'],

ylabel='state (s)', xlabel='action (a)',

title='Utility')

ax_utility.xaxis.set_ticks_position('bottom')

ax_utility.spines['left'].set_visible(False)

ax_utility.spines['bottom'].set_visible(False)

# Expected utility plot details

ax_expected.set(title='Expected utility', ylim=[-3, 3],

xticks=[0, 1], xticklabels=['left', 'right'],

xlabel='action (a)', yticks=[])

ax_expected.xaxis.set_ticks_position('bottom')

ax_expected.spines['left'].set_visible(False)

ax_expected.spines['bottom'].set_visible(False)

# show values

ind = np.arange(2)

x,y = np.meshgrid(ind,ind)

for i,j in zip(x.flatten(), y.flatten()):

c = f"{utility[i, j]:.2f}"

ax_utility.text(j, i, c, va='center', ha='center', color='black')

for i in ind:

v = prior[i]

c = f"{v:.2f}"

ax_prior.text(v+0.2, i, c, va='center', ha='center', color='black')

for i in ind:

v = expected[i]

c = f"{v:.2f}"

ax_expected.text(i, 2.5, c, va='center', ha='center', color='black')

return fig

def plot_prior_likelihood_utility(ps, p_a_s1, p_a_s0, measurement):

assert 0.0 <= ps <= 1.0

assert 0.0 <= p_a_s1 <= 1.0

assert 0.0 <= p_a_s0 <= 1.0

prior = np.asarray([ps, 1 - ps])

likelihood = np.asarray([[p_a_s1, 1-p_a_s1],[p_a_s0, 1-p_a_s0]])

utility = np.array([[2.0, -3.0], [-2.0, 1.0]])

if measurement == "Fish":

posterior = likelihood[:, 0] * prior

else:

posterior = (likelihood[:, 1] * prior).reshape(-1)

posterior /= np.sum(posterior)

expected = posterior @ utility

# definitions for the axes

left, width = 0.05, 0.3

bottom, height = 0.05, 0.3

padding = 0.12

small_width = 0.2

left_space = left + small_width + padding

small_padding = 0.05

fig = plt.figure(figsize=(10, 9))

rect_prior = [left, bottom + height + padding, small_width, height]

rect_likelihood = [left_space , bottom + height + padding , width, height]

rect_posterior = [left_space + width + small_padding,

bottom + height + padding,

small_width, height]

rect_utility = [padding, bottom, width, height]

rect_expected = [padding + width + padding + left, bottom, width, height]

ax_likelihood = fig.add_axes(rect_likelihood)

ax_prior = fig.add_axes(rect_prior, sharey=ax_likelihood)

ax_posterior = fig.add_axes(rect_posterior, sharey=ax_likelihood)

ax_utility = fig.add_axes(rect_utility)

ax_expected = fig.add_axes(rect_expected)

prior_colormap = plt.cm.Blues

posterior_colormap = plt.cm.Greens

expected_colormap = plt.cm.Wistia

# Show posterior probs and marginals

ax_prior.barh(0, prior[0], facecolor=prior_colormap(prior[0]))

ax_prior.barh(1, prior[1], facecolor=prior_colormap(prior[1]))

ax_likelihood.matshow(likelihood, vmin=0., vmax=1., cmap='Reds')

ax_posterior.barh(0, posterior[0], facecolor=posterior_colormap(posterior[0]))

ax_posterior.barh(1, posterior[1], facecolor=posterior_colormap(posterior[1]))

ax_utility.matshow(utility, vmin=0., vmax=1., cmap='cool')

ax_expected.bar(0, expected[0], facecolor=expected_colormap(expected[0]))

ax_expected.bar(1, expected[1], facecolor=expected_colormap(expected[1]))

# Probabilities plot details

ax_prior.set(xlim=[1, 0], yticks=[0, 1], yticklabels=['left', 'right'],

title="Prior p(s)", xticks=[])

ax_prior.yaxis.tick_right()

ax_prior.spines['left'].set_visible(False)

ax_prior.spines['bottom'].set_visible(False)

# Likelihood plot details

ax_likelihood.set(xticks=[0, 1], xticklabels=['fish', 'no fish'],

yticks=[0, 1], yticklabels=['left', 'right'],

ylabel='state (s)', xlabel='measurement (m)',

title='Likelihood p(m | s)')

ax_likelihood.xaxis.set_ticks_position('bottom')

ax_likelihood.spines['left'].set_visible(False)

ax_likelihood.spines['bottom'].set_visible(False)

# Posterior plot details

ax_posterior.set(xlim=[0, 1], xticks=[], yticks=[0, 1],

yticklabels=['left', 'right'],

title="Posterior p(s | m)")

ax_posterior.spines['left'].set_visible(False)

ax_posterior.spines['bottom'].set_visible(False)

# Utility plot details

ax_utility.set(xticks=[0, 1], xticklabels=['left', 'right'],

xlabel='action (a)', yticks=[0, 1],

yticklabels=['left', 'right'],

title='Utility', ylabel='state (s)')

ax_utility.xaxis.set_ticks_position('bottom')

ax_utility.spines['left'].set_visible(False)

ax_utility.spines['bottom'].set_visible(False)

# Expected Utility plot details

ax_expected.set(ylim=[-2, 2], xticks=[0, 1],

xticklabels=['left', 'right'],

xlabel='action (a)',

title='Expected utility', yticks=[])

ax_expected.spines['left'].set_visible(False)

# show values

ind = np.arange(2)

x,y = np.meshgrid(ind,ind)

for i in ind:

v = posterior[i]

c = f"{v:.2f}"

ax_posterior.text(v+0.2, i, c, va='center', ha='center', color='black')

for i,j in zip(x.flatten(), y.flatten()):

c = f"{likelihood[i,j]:.2f}"

ax_likelihood.text(j, i, c, va='center', ha='center', color='black')

for i,j in zip(x.flatten(), y.flatten()):

c = f"{utility[i, j]:.2f}"

ax_utility.text(j,i, c, va='center', ha='center', color='black')

for i in ind:

v = prior[i]

c = f"{v:.2f}"

ax_prior.text(v+0.2, i, c, va='center', ha='center', color='black')

for i in ind:

v = expected[i]

c = f"{v:.2f}"

ax_expected.text(i, v, c, va='center', ha='center', color='black')

plt.show()

return fig

Helper Functions#

Show code cell source

# @title Helper Functions

def compute_marginal(px, py, cor):

""" Calculate 2x2 joint probabilities given marginals p(x=1), p(y=1) and correlation

Args:

px (scalar): marginal probability of x

py (scalar): marginal probability of y

cor (scalar): correlation value

Returns:

ndarray of size (2, 2): joint probability array of x and y

"""

p11 = px*py + cor*np.sqrt(px*py*(1-px)*(1-py))

p01 = px - p11

p10 = py - p11

p00 = 1.0 - p11 - p01 - p10

return np.asarray([[p00, p01], [p10, p11]])

def compute_cor_range(px,py):

""" Calculate the allowed range of correlation values given marginals p(x=1)

and p(y=1)

Args:

px (scalar): marginal probability of x

py (scalar): marginal probability of y

Returns:

scalar, scalar: minimum and maximum possible values of correlation

"""

def p11(corr):

return px*py + corr*np.sqrt(px*py*(1-px)*(1-py))

def p01(corr):

return px - p11(corr)

def p10(corr):

return py - p11(corr)

def p00(corr):

return 1.0 - p11(corr) - p01(corr) - p10(corr)

Cmax = min(fsolve(p01, 0.0), fsolve(p10, 0.0))

Cmin = max(fsolve(p11, 0.0), fsolve(p00, 0.0))

return Cmin, Cmax

Section 0: Introduction#

Video 1: Introduction to Bayesian Statistics and Decisions#

Submit your feedback#

Show code cell source

# @title Submit your feedback

content_review(f"{feedback_prefix}_Introduction_to_Bayesian_Statistics_and_Decisions_Video")

Section 1: Gone Fishin’#

Video 2: Gone Fishin’#

Submit your feedback#

Show code cell source

# @title Submit your feedback

content_review(f"{feedback_prefix}_Gone_Fishin_Video")

This video covers the example problem of fishing that we will cover in this tutorial.

Click here for text recap of video

You were just introduced to the binary hidden state problem we are going to explore. You need to decide which side to fish on–the hidden state. We know fish like to school together. On different days the school of fish is either on the left or right side, but we don’t know what the case is today. We define our knowledge about the fish location as a distribution over the random hidden state variable. Using our probabilistic knowledge, also called our belief about the hidden state, we will explore how to make the decision about where to fish today, based on what to expect in terms of gains or losses for the decision. The gains and losss are defined by the utility of choosing an action, which is fishing on the left or right. The details of the utilities are described

In the next two sections we will consider just the probability of where the fish might be and what you gain or lose by choosing where to fish (leaving Bayesian approaches to the last few sections).

Remember, you can either think of yourself as a scientist conducting an experiment or as a brain trying to make a decision. The Bayesian approach is the same!

Section 2: Deciding where to fish#

Estimated timing to here from start of tutorial: 10 min

Video 3: Utility#

Submit your feedback#

Show code cell source

# @title Submit your feedback

content_review(f"{feedback_prefix}_Utility_Video")

This video covers utility and expected utility.

Click here for text recap of video

You need to decide where to fish. It may seem obvious - you could just fish on the side where the probability of the fish being is higher! Unfortunately, decisions and actions are always a little more complicated. Deciding to fish may be influenced by more than just the probability of the school of fish being there as we saw by the potential issues of submarines and sunburn. The consequences of the action you take is based on the true (but hidden) state of the world and the action you choose! In our example, fishing on the wrong side, where there aren’t many fish, is likely to lead to you spending your afternoon not catching fish and therefore getting a sunburn. The submarine represents a risk to fishing on the right side that is greater than the left side. If you want to know what to expect from taking the action of fishing on one side or the other, you need to calculate the expected utility.

You know the (prior) probability that the school of fish is on the left side of the dock today, \(P(s = \textrm{left})\). So, you also know the probability the school is on the right, \(P(s = \textrm{right})\), because these two probabilities must add up to 1.

We quantify gains and losses numerically using a utility function \(U(s,a)\), which describes the consequences of your actions: how much value you gain (or if negative, lose) given the state of the world (\(s\)) and the action you take (\(a\)). In our example, our utility can be summarized as:

Utility: U(s,a) |

a = left |

a = right |

|---|---|---|

s = Left |

+2 |

-3 |

s = right |

-2 |

+1 |

To use possible gains and losses to choose an action, we calculate the expected utility of that action by weighing these utilities with the probability of that state occurring. This allows us to choose actions by taking probabilities of events into account: we don’t care if the outcome of an action-state pair is a loss if the probability of that state is very low. We can formalize this as:

In other words, the expected utility of an action a is the sum over possible states of the utility of that action and state times the probability of that state.

Interactive Demo 2: Exploring the decision#

Let’s start to get a sense of how all this works using the interactive demo below. You can change the probability that the school of fish is on the left side,\(p(s = \textrm{left})\), using the slider. You will see the utility function (a matrix) in the middle and the corresponding expected utility for each action on the right.

First, make sure you understand how the expected utility of each action is being computed from the probabilities and the utility values. In the initial state: the probability of the fish being on the left is 0.9 and on the right is 0.1. The expected utility of the action of fishing on the left is then \(U(s = \textrm{left},a = \textrm{left})p(s = \textrm{left}) + U(s = \textrm{right},a = \textrm{left})p(s = \textrm{right}) = 2(0.9) + -2(0.1) = 1.6\). Essentially, to get the expected utility of action \(a\), you are doing a weighted sum over the relevant column of the utility matrix (corresponding to action \(a\)) where the weights are the state probabilities.

For each of these scenarios, think and discuss first. Then use the demo to try out each and see if your action would have been correct (that is, if the expected value of that action is the highest).

You just arrived at the dock for the first time and have no sense of where the fish might be. So you guess that the probability of the school being on the left side is 0.5 (so the probability on the right side is also 0.5). Which side would you choose to fish on given our utility values?

You think that the probability of the school being on the left side is very low (0.1) and correspondingly high on the right side (0.9). Which side would you choose to fish on given our utility values?

What would you choose if the probability of the school being on the left side is slightly lower than on the right side (0. 4 vs 0.6)?

Execute this cell to use the widget

Show code cell source

# @markdown Execute this cell to use the widget

ps_widget = widgets.FloatSlider(0.9, description='p(s = left)',

min=0.0, max=1.0, step=0.01)

@widgets.interact(

ps = ps_widget,

)

def make_utility_plot(ps):

fig = plot_utility(ps)

plt.show(fig)

plt.close(fig)

Submit your feedback#

Show code cell source

# @title Submit your feedback

content_review(f"{feedback_prefix}_Exploring_the_decision_Interactive_Demo_and_Discussion")

Video 4: Utility Demo Discussion#

Submit your feedback#

Show code cell source

# @title Submit your feedback

content_review(f"{feedback_prefix}_Utility_demo_discussion_Video")

In this section, you have seen that both the utility of various state and action pairs and our knowledge of the probability of each state affects your decision. Importantly, we want our knowledge of the probability of each state to be as accurate as possible!

So how do we know these probabilities? We may have prior knowledge from years of fishing at the same dock, learning that the fish are more likely to be on the left side, for example. Of course, we need to update our knowledge (our belief)! To do this, we need to collect more information, or take some measurements! In the next few sections, we will focus on how we improve our knowledge of the probabilities.

Section 3: Likelihood of the fish being on either side#

Estimated timing to here from start of tutorial: 25 min

Video 5: Likelihood#

Click here for text recap of video

First, we’ll think about what it means to take a measurement (also often called an observation or just data) and what it tells you about what the hidden state may be. Specifically, we’ll be looking at the likelihood, which is the probability of your measurement (\(m\)) given the hidden state (\(s\)): \(P(m | s)\). Remember that in this case, the hidden state is which side of the dock the school of fish is on. We will watch someone fish (for let’s say 10 minutes) and our measurement is whether they catch a fish or not. We know something about what catching a fish means for the likelihood of the fish being on one side or the other.

Submit your feedback#

Show code cell source

# @title Submit your feedback

content_review(f"{feedback_prefix}_Likelihood_Video")

Think! 3: Guessing the location of the fish#

Let’s say we go to a different dock to fish. Here, there are different probabilities of catching fish given the state of the world. At this dock, if you fish on the side of the dock where the fish are, you have a 70% chance of catching a fish. If you fish on the wrong side, you will catch a fish with only 20% probability. These are the likelihoods of observing someone catching a fish! That is, you are taking a measurement by seeing if someone else catches a fish!

You see a fisher-person is fishing on the left side.

Please figure out each of the following (might be easiest to do this separately and then compare notes):

probability of catching a fish given that the school of fish is on the left side, \(P(m = \textrm{catch fish} | s = \textrm{left} )\)

probability of not catching a fish given that the school of fish is on the left side, \(P(m = \textrm{no fish} | s = \textrm{left})\)

probability of catching a fish given that the school of fish is on the right side, \(P(m = \textrm{catch fish} | s = \textrm{right})\)

probability of not catching a fish given that the school of fish is on the right side, \(P(m = \textrm{no fish} | s = \textrm{right})\)

If the fisher-person catches a fish, which side would you guess the school is on? Why?

If the fisher-person does not catch a fish, which side would you guess the school is on? Why?

Submit your feedback#

Show code cell source

# @title Submit your feedback

content_review(f"{feedback_prefix}_Guessing_the_location_of_the_fish_Discussion")

In the prior exercise, you tried to guess where the school of fish was based on the measurement you took (watching someone fish). You did this by choosing the state (side where you think the fish are) that maximized the probability of the measurement. In other words, you estimated the state by maximizing the likelihood (the side with the highest probability of measurement given state \(P(m|s\))). This is called maximum likelihood estimation (MLE) and you’ve encountered it before during this course, in the pre-reqs statistics day and on Model Fitting day!

But, what if you had been going to this dock for years and you knew that the fish were almost always on the left side? This should probably affect how you make your estimate – you would rely less on the single new measurement and more on your prior knowledge. This is the fundamental idea behind Bayesian inference, as we will see later in this tutorial!

Section 4: Correlation and marginalization#

Estimated timing to here from start of tutorial: 35 min

Video 6: Correlation and marginalization#

Submit your feedback#

Show code cell source

# @title Submit your feedback

content_review(f"{feedback_prefix}_Correlation_and_marginalization_Video")

Section 4.1: Correlation#

In this section, we are going to take a step back for a bit and think more generally about the amount of information shared between two random variables. We want to know how much information you gain when you observe one variable (take a measurement) if you know something about another. We will see that the fundamental concept is the same if we think about two attributes, for example the size and color of the fish, or the prior information and the likelihood.

Think! 4.1: Covarying probability distributions#

The relationship between the marginal probabilities and the joint probabilities is determined by the correlation between the two random variables - a normalized measure of how much the variables covary. We can also think of this as gaining some information about one of the variables when we observe a measurement from the other. We will think about this more formally in Tutorial 2.

Here, we want to think about how the correlation between size and color of these fish changes how much information we gain about one attribute based on the other. See Bonus Section 1 for the formula for correlation.

Use the widget below and answer the following questions:

When the correlation is zero, \(\rho = 0\), what does the distribution of size tell you about color?

Set \(\rho\) to something small. As you change the probability of golden fish, what happens to the ratio of size probabilities? Set \(\rho\) larger (can be negative). Can you explain the pattern of changes in the probabilities of size as you change the probability of golden fish?

Set the probability of golden fish and of large fish to around 65%. As the correlation goes towards 1, how often will you see silver large fish?

What is increasing the (absolute) correlation telling you about how likely you are to see one of the properties if you see a fish with the other?

Execute this cell to enable the widget

Show code cell source

# @markdown Execute this cell to enable the widget

style = {'description_width': 'initial'}

gs = GridspecLayout(2,2)

cor_widget = widgets.FloatSlider(0.0, description='ρ',

min=-1, max=1, step=0.01)

px_widget = widgets.FloatSlider(0.5, description='p(color=golden)',

min=0.01, max=0.99, step=0.01,

style=style)

py_widget = widgets.FloatSlider(0.5, description='p(size=large)',

min=0.01, max=0.99, step=0.01,

style=style)

gs[0, 0] = cor_widget

gs[0, 1] = px_widget

gs[1, 0] = py_widget

@widgets.interact(

px=px_widget,

py=py_widget,

cor=cor_widget,

)

def make_corr_plot(px, py, cor):

Cmin, Cmax = compute_cor_range(px, py) # allow correlation values

cor_widget.min, cor_widget.max = Cmin+0.01, Cmax-0.01

if cor_widget.value > Cmax:

cor_widget.value = Cmax

if cor_widget.value < Cmin:

cor_widget.value = Cmin

cor = cor_widget.value

P = compute_marginal(px,py,cor)

fig = plot_joint_probs(P)

plt.show(fig)

plt.close(fig)

Submit your feedback#

Show code cell source

# @title Submit your feedback

content_review(f"{feedback_prefix}_Covarying_probability_distributions_Discussion")

We have just seen how two random variables can be more or less independent. The more correlated, the less independent, and the more shared information. We also learned that we can marginalize to determine the marginal likelihood of a measurement or to find the marginal probability distribution of two random variables. We are going to now complete our journey towards being fully Bayesian!

Section 4.2: Marginalization#

Click here for text recap of relevant part of video

We may want to find the probability of one variable while ignoring another: we will do this by averaging out, or marginalizing, the irrelevant variable.

We will think of this in two different ways.

In the first math exercise, you will think about the case where you know the joint probabilities of two variables and want to figure out the probability of just one variable. To make this explicit, let’s assume that a fish has a color that is either gold or silver (our first variable) and a size that is either small or large (our second). We could write out the the joint probabilities: the probability of both specific attributes occurring together. For example, the probability of a fish being small and silver, \(P(X = \textrm{small}, Y = \textrm{silver})\), is 0.4. The following table summarizes our joint probabilities:

P(X, Y) |

Y = silver |

Y = gold |

|---|---|---|

X = small |

0.4 |

0.2 |

X = large |

0.1 |

0.3 |

We want to know what the probability of a fish being small regardless of color. Since the fish are either silver or gold, this would be the probability of a fish being small and silver plus the probability of a fish being small and gold. This is an example of marginalizing, or averaging out, the variable we are not interested in across the rows or columns.. In math speak: \(P(X = \textrm{small}) = \sum_y{P(X = \textrm{small}, Y)}\). This gives us a marginal probability, a probability of a variable outcome (in this case size), regardless of the other variables (in this case color).

More generally, we can marginalize out a second irrelevant variable \(y\) by summing over the relevant joint probabilities:

In the second math exercise, you will remove an unknown (the hidden state) to find the marginal probability of a measurement. You will do this by marginalizing out the hidden state. In this case, you know the conditional probabilities of the measurement given state and the probabilities of each state. You can marginalize using:

These two ways of thinking about marginalization (as averaging over joint probabilities or conditioning on some variable) are equivalent because the joint probability of two variables equals the conditional probability of the first given the second times the marginal probability of the second:

Math Exercise 4.2.1: Computing marginal probabilities#

To understand the information between two variables, let’s first consider the joint probabilities over the size and color of the fish.

P(X, Y) |

Y = silver |

Y = gold |

|---|---|---|

X = small |

0.4 |

0.2 |

X = large |

0.1 |

0.3 |

Please complete the following math problems to further practice thinking through probabilities:

Calculate the probability of a fish being silver.

Calculate the probability of a fish being small, large, silver, or gold.

Calculate the probability of a fish being small OR gold. Hint: \(P(A\ \textrm{or}\ B) = P(A) + P(B) - P(A\ \textrm{and}\ B)\).

We don’t typically have math exercises in NMA but feel it is important for you to really compute this out yourself. Feel free to use the next cell to write out the math if helpful, or use paper and pen. We recommend doing this exercise individually first and then comparing notes and discussing.

"""

Joint probabilities

P( X = small, Y = silver) = 0.4

P( X = large, Y = silver) = 0.1

P( X = small, Y = gold) = 0.2

P( X = large, Y = gold) = 0.3

1. P(Y = silver) = ...

2. P(X = small or large, Y = silver or gold) = ...

3. P( X = small or Y = gold) = ...

"""

'\nJoint probabilities\n\nP( X = small, Y = silver) = 0.4\nP( X = large, Y = silver) = 0.1\nP( X = small, Y = gold) = 0.2\nP( X = large, Y = gold) = 0.3\n\n\n1. P(Y = silver) = ...\n\n2. P(X = small or large, Y = silver or gold) = ...\n\n3. P( X = small or Y = gold) = ...\n\n'

Submit your feedback#

Show code cell source

# @title Submit your feedback

content_review(f"{feedback_prefix}_Computing_marginal_probabilities_Math_Exercise")

Math Exercise 4.2.2: Computing marginal likelihood#

When we normalize to find the posterior, we need to determine the marginal likelihood–or evidence–for the measurement we observed. To do this, we need to marginalize as we just did above to find the probabilities of a color or size. Only, in this case, we are marginalizing to remove a conditioning variable! In this case, let’s consider the likelihood of fish (if we observed a fisher-person fishing on the right).

p(m|s) |

m = fish |

m = no fish |

|---|---|---|

s = left |

0.1 |

0.9 |

s = right |

0.5 |

0.5 |

The table above shows us the likelihoods, just as we explored earlier.

You want to know the total probability of a fish being caught, \(P(m = \textrm{fish})\), by the fisher-person fishing on the right. (You would need this to calculate the posterior.) To do this, you will need to consider the prior probability, \(p(s)\), and marginalize over the hidden states!

This is an example of marginalizing, or conditioning away, the variable we are not interested in as well.

Please complete the following math problems to further practice thinking through probabilities:

Calculate the marginal likelihood of the fish being caught, \(P(m = \textrm{fish})\), if the priors are: \(p(s = \textrm{left}) = 0.3\) and \(p(s = \textrm{right}) = 0.7\).

Calculate the marginal likelihood of the fish being caught, \(P(m = \textrm{fish})\), if the priors are: \(p(s = \textrm{left}) = 0.6\) and \(p(s = \textrm{right}) = 0.4\).

We don’t typically have math exercises in NMA but feel it is important for you to really compute this out yourself. Feel free to use the next cell to write out the math if helpful, or use paper and pen. We recommend doing this exercise individually first and then comparing notes and discussing.

"""

Priors

P(s = left) = 0.3

P(s = right) = 0.7

Likelihoods

P(m = fish | s = left) = 0.1

P(m = fish | s = right) = 0.5

P(m = no fish | s = left) = 0.9

P(m = no fish | s = right) = 0.5

1. P(m = fish) = ...

2. P(m = fish) = ...

"""

'\n\nPriors\nP(s = left) = 0.3\nP(s = right) = 0.7\n\nLikelihoods\nP(m = fish | s = left) = 0.1\nP(m = fish | s = right) = 0.5\nP(m = no fish | s = left) = 0.9\nP(m = no fish | s = right) = 0.5\n\n1. P(m = fish) = ...\n\n2. P(m = fish) = ...\n\n'

Submit your feedback#

Show code cell source

# @title Submit your feedback

content_review(f"{feedback_prefix}_Computing_marginal_likelihood_Math_Exercise")

Section 5: Bayes’ Rule and the Posterior#

Estimated timing to here from start of tutorial: 55 min

Video 7: Posterior Beliefs#

Submit your feedback#

Show code cell source

# @title Submit your feedback

content_review(f"{feedback_prefix}_Posterior_Beliefs_Video")

Marginalization is going to be used to combine our prior knowledge, which we call the prior, and our new information from a measurement, the likelihood. Only in this case, the information we gain about the hidden state we are interested in, where the fish are, is based on the relationship between the probabilities of the measurement and our prior.

We can now calculate the full posterior distribution for the hidden state (\(s\)) using Bayes’ Rule. As we’ve seen, the posterior is proportional to the prior times the likelihood. This means that the posterior probability of the hidden state (\(s\)) given a measurement (\(m\)) is proportional to the likelihood of the measurement given the state times the prior probability of that state:

We say proportional to instead of equal because we need to normalize to produce a full probability distribution:

Normalizing by this \(P(m)\) means that our posterior is a complete probability distribution that sums or integrates to 1 appropriately. We now can use this new, complete probability distribution for any future inference or decisions we like! In fact, as we will see tomorrow, we can use it as a new prior! Finally, we often call this probability distribution our beliefs over the hidden states, to emphasize that it is our subjective knowledge about the hidden state.

For many complicated cases, like those we might be using to model behavioral or brain inferences, the normalization term can be intractable or extremely complex to calculate. We can be careful to choose probability distributions whfere we can analytically calculate the posterior probability or numerical approximation is reliable. Better yet, we sometimes don’t need to bother with this normalization! The normalization term, \(P(m)\), is the probability of the measurement. This does not depend on state so is essentially a constant we can often ignore. We can compare the unnormalized posterior distribution values for different states because how they relate to each other is unchanged when divided by the same constant. We will see how to do this to compare evidence for different hypotheses tomorrow. (It’s also used to compare the likelihood of models fit using maximum likelihood estimation)

In this relatively simple example, we can compute the marginal likelihood \(P(m)\) easily by using:

We can then normalize so that we deal with the full posterior distribution.

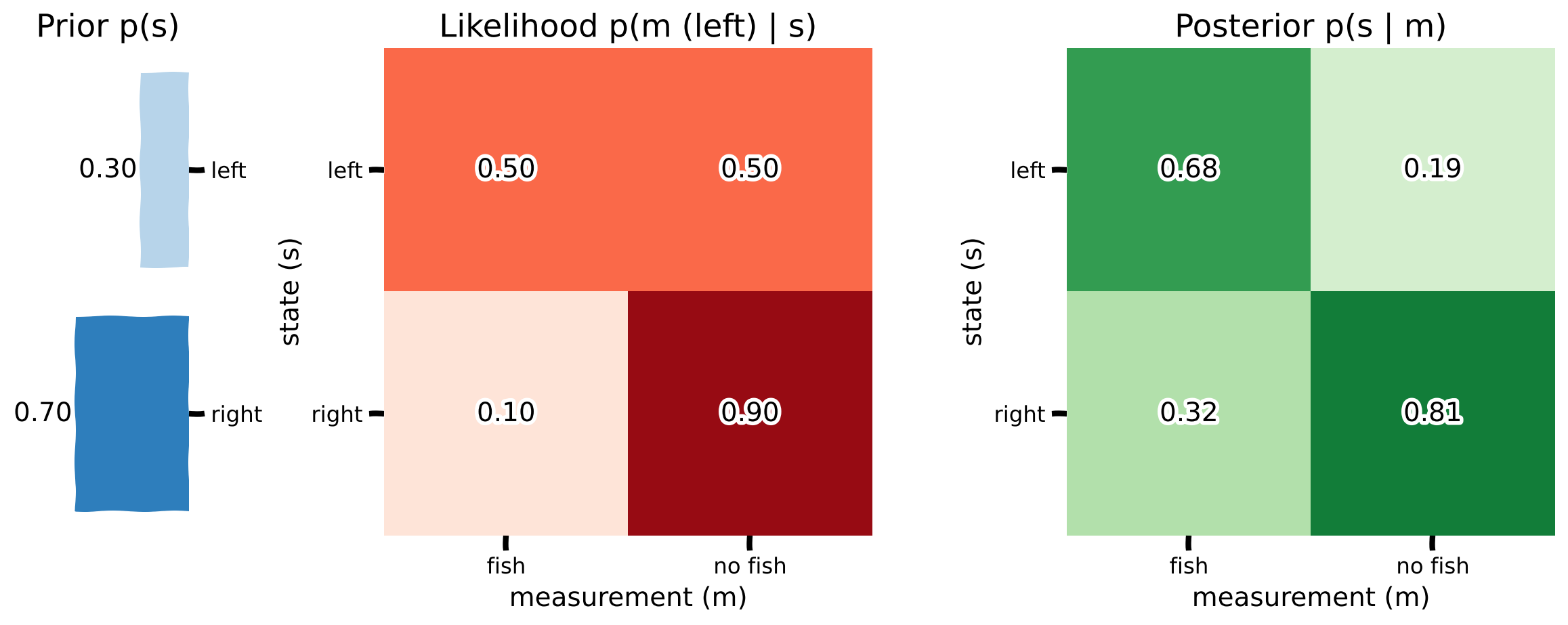

Math Exercise 5: Calculating a posterior probability#

Our prior is \(p(s = \textrm{left}) = 0.3\) and \(p(s = \textrm{right}) = 0.7\). In the video, we learned that the chance of catching a fish given they fish on the same side as the school was 50%. Otherwise, it was 10%. We observe a person fishing on the left side. Our likelihood is:

Likelihood: p(m | s) |

m = fish |

m = no fish |

|---|---|---|

s = left |

0.5 |

0.5 |

s = right |

0.1 |

0.9 |

Calculate the posterior probability (on paper) that:

The school is on the left if the fisher-person catches a fish: \(p(s = \textrm{left} | m = \textrm{fish})\) (hint: normalize by computing \(p(m = \textrm{fish})\))

The school is on the right if the fisher-person does not catch a fish: \(p(s = \textrm{right} | m = \textrm{no fish})\)

We don’t typically have math exercises in NMA but feel it is important for you to really compute this out yourself. Feel free to use the next cell to write out the math if helpful, or use paper and pen. We recommend doing this exercise individually first and then comparing notes and discussing.

"""

Priors

p(s = left) = 0.3

p(s = right) = 0.7

Likelihoods

P(m = fish | s = left) = 0.5

P(m = fish | s = right) = 0.1

P(m = no fish | s = left) = 0.5

P(m = no fish | s = right) = 0.9

1. p( s = left | m = fish) = ...

2. p( s = right | m = no fish ) = ...

"""

'\nPriors\np(s = left) = 0.3\np(s = right) = 0.7\n\nLikelihoods\nP(m = fish | s = left) = 0.5\nP(m = fish | s = right) = 0.1\nP(m = no fish | s = left) = 0.5\nP(m = no fish | s = right) = 0.9\n\n1. p( s = left | m = fish) = ...\n\n2. p( s = right | m = no fish ) = ...\n\n'

Submit your feedback#

Show code cell source

# @title Submit your feedback

content_review(f"{feedback_prefix}_Calculating_a_posterior_probability_Math_Exercise")

Coding Exercise 5: Computing Posteriors#

Let’s implement our above math to be able to compute posteriors for different priors and likelihoods.

As before, our prior is \(p(s = \textrm{left}) = 0.3\) and \(p(s = \textrm{right}) = 0.7\). In the video, we learned that the chance of catching a fish given they fish on the same side as the school was 50%. Otherwise, it was 10%. We observe a person fishing on the left side. Our likelihood is:

Likelihood: p(m | s) |

m = fish |

m = no fish |

|---|---|---|

s = left |

0.5 |

0.5 |

s = right |

0.1 |

0.9 |

We want our full posterior to take the same 2 by 2 form. Make sure the outputs match your math answers!

def compute_posterior(likelihood, prior):

""" Use Bayes' Rule to compute posterior from likelihood and prior

Args:

likelihood (ndarray): i x j array with likelihood probabilities where i is

number of state options, j is number of measurement options

prior (ndarray): i x 1 array with prior probability of each state

Returns:

ndarray: i x j array with posterior probabilities where i is

number of state options, j is number of measurement options

"""

#################################################

## TODO for students ##

# Fill out function and remove

raise NotImplementedError("Student exercise: implement compute_posterior")

#################################################

# Compute unnormalized posterior (likelihood times prior)

posterior = ... # first row is s = left, second row is s = right

# Compute p(m)

p_m = np.sum(posterior, axis = 0)

# Normalize posterior (divide elements by p_m)

posterior /= ...

return posterior

# Make prior

prior = np.array([0.3, 0.7]).reshape((2, 1)) # first row is s = left, second row is s = right

# Make likelihood

likelihood = np.array([[0.5, 0.5], [0.1, 0.9]]) # first row is s = left, second row is s = right

# Compute posterior

posterior = compute_posterior(likelihood, prior)

# Visualize

plot_prior_likelihood_posterior(prior, likelihood, posterior)

Example output:

Submit your feedback#

Show code cell source

# @title Submit your feedback

content_review(f"{feedback_prefix}_Computing_Posteriors_Exercise")

Interactive Demo 5: What affects the posterior?#

Now that we can understand the implementation of Bayes rule, let’s vary the parameters of the prior and likelihood to see how changing the prior and likelihood affect the posterior.

In the demo below, you can change the prior by playing with the slider for \(p( s = left)\). You can also change the likelihood by changing the probability of catching a fish given that the school is on the left and the probability of catching a fish given that the school is on the right. The fisher-person you are observing is fishing on the left.

Keeping the likelihood constant, when does the prior have the strongest influence over the posterior? Meaning, when does the posterior look most like the prior no matter whether a fish was caught or not?

What happens if the likelihoods for catching a fish are similar when you fish on the correct or incorrect side?

Set the prior probability of the state = left to 0.6 and play with the likelihood. When does the likelihood exert the most influence over the posterior?

Execute this cell to enable the widget

Show code cell source

# @markdown Execute this cell to enable the widget

# style = {'description_width': 'initial'}

ps_widget = widgets.FloatSlider(0.3, description='p(s = left)',

min=0.01, max=0.99, step=0.01)

p_a_s1_widget = widgets.FloatSlider(0.5,

description='p(fish on left | state = left)',

min=0.01, max=0.99, step=0.01, style=style,

layout=Layout(width='370px'))

p_a_s0_widget = widgets.FloatSlider(0.1,

description='p(fish on left | state = right)',

min=0.01, max=0.99, step=0.01, style=style,

layout=Layout(width='370px'))

observed_widget = ToggleButtons(options=['Fish', 'No Fish'],

description='Observation (m) on the left:', disabled=False, button_style='',

layout=Layout(width='auto', display="flex"),

style={'description_width': 'initial'}

)

widget_ui = VBox([ps_widget,

HBox([p_a_s1_widget, p_a_s0_widget]),

observed_widget])

widget_out = interactive_output(plot_prior_likelihood,

{'ps': ps_widget,

'p_a_s1': p_a_s1_widget,

'p_a_s0': p_a_s0_widget,

'measurement': observed_widget})

display(widget_ui, widget_out)

Submit your feedback#

Show code cell source

# @title Submit your feedback

content_review(f"{feedback_prefix}_What_affects_the_posterior_Interactive_Demo_and_Discussion")

Video 8: Posterior Beliefs Exercises Discussion#

Submit your feedback#

Show code cell source

# @title Submit your feedback

content_review(f"{feedback_prefix}_Posterior_Beliefs_Exercises_Discussion_Video")

Section 6: Making Bayesian fishing decisions#

Estimated timing to here from start of tutorial: 1 hr, 15 min

Video 9: Bayesian Decisions#

Submit your feedback#

Show code cell source

# @title Submit your feedback

content_review(f"{feedback_prefix}_Bayesian_Decisions_Video")

We will explore how to consider the expected utility of an action based on our belief (the posterior distribution) about where we think the fish are. Now we have all the components of a Bayesian decision: our prior information, the likelihood given a measurement, the posterior distribution (belief) and our utility (the gains and losses). This allows us to consider the relationship between the true value of the hidden state, \(s\), and what we expect to get if we take action, \(a\), based on our belief!

Let’s use the following widget to think about the relationship between these probability distributions and utility function.

Interactive Demo! 6: What is more important, the probabilities or the utilities?#

We are now going to put everything we’ve learned together to gain some intuitions for how each of the elements that goes into a Bayesian decision comes together. Remember, the common assumption in neuroscience, psychology, economics, ecology, etc. is that we (humans and animals) are trying to maximize our expected utility. There is a lot going on in this demo as it brings everything in this tutorial together in one place - please spend time making sure you understand the controls and the plots, especially how everything relates together.

Can you find a situation where the expected utility is the same for both actions?

What is more important for determining the expected utility: the prior or a new measurement (the likelihood)?

Why is this a normative model?

Can you think of ways in which this model would need to be extended to describe human or animal behavior?

Execute this cell to enable the widget

Show code cell source

# @markdown Execute this cell to enable the widget

# style = {'description_width': 'initial'}

ps_widget = widgets.FloatSlider(0.3, description='p(s = left)',

min=0.01, max=0.99, step=0.01,

layout=Layout(width='300px'))

p_a_s1_widget = widgets.FloatSlider(0.5,

description='p(fish on left | state = left)',

min=0.01, max=0.99, step=0.01,

style=style, layout=Layout(width='370px'))

p_a_s0_widget = widgets.FloatSlider(0.1,

description='p(fish on left | state = right)',

min=0.01, max=0.99, step=0.01,

style=style, layout=Layout(width='370px'))

observed_widget = ToggleButtons(options=['Fish', 'No Fish'],

description='Observation (m) on the left:', disabled=False, button_style='',

layout=Layout(width='auto', display="flex"),

style={'description_width': 'initial'}

)

widget_ui = VBox([ps_widget,

HBox([p_a_s1_widget, p_a_s0_widget]),

observed_widget])

widget_out = interactive_output(plot_prior_likelihood_utility,

{'ps': ps_widget,

'p_a_s1': p_a_s1_widget,

'p_a_s0': p_a_s0_widget,

'measurement': observed_widget})

display(widget_ui, widget_out)

Submit your feedback#

Show code cell source

# @title Submit your feedback

content_review(f"{feedback_prefix}_Probabilities_vs_utilities_Interactive_Demo_and_Discussion")

Video 10: Bayesian Decisions Demo Discussion#

Submit your feedback#

Show code cell source

# @title Submit your feedback

content_review(f"{feedback_prefix}_Bayesian_Decisions_Demo_Discussion_Video")

Summary#

Estimated timing of tutorial: 1 hour, 30 minutes

In this tutorial, you learned about combining prior information with new measurements to update your knowledge using Bayes Rule, in the context of a fishing problem.

Specifically, we covered:

That the likelihood is the probability of the measurement given some hidden state

That how the prior and likelihood interact to create the posterior, the probability of the hidden state given a measurement, depends on how they covary

That utility is the gain from each action and state pair, and the expected utility for an action is the sum of the utility for all state pairs, weighted by the probability of that state happening. You can then choose the action with the highest expected utility.

Bonus#

Bonus Section 1: Correlation Formula#

To understand the way we calculate the correlation, we need to review the definition of covariance and correlation.

Covariance:

Correlation: