Tutorial 1: Geometric view of data#

Week 1, Day 4: Dimensionality Reduction

By Neuromatch Academy

Content creators: Alex Cayco Gajic, John Murray

Content reviewers: Roozbeh Farhoudi, Matt Krause, Spiros Chavlis, Richard Gao, Michael Waskom, Siddharth Suresh, Natalie Schaworonkow, Ella Batty

Production editors: Gagana B, Spiros Chavlis

Tutorial Objectives#

Estimated timing of tutorial: 50 minutes

In this notebook we’ll explore how multivariate data can be represented in different orthonormal bases. This will build intuition that will help us understand PCA in the following tutorial.

Overview:

Generate correlated multivariate data.

Define an arbitrary orthonormal basis.

Project the data onto the new basis.

Setup#

Install and import feedback gadget#

# @title Install and import feedback gadget

!pip3 install vibecheck datatops --quiet

from vibecheck import DatatopsContentReviewContainer

def content_review(notebook_section: str):
    return DatatopsContentReviewContainer(
        "",  # No text prompt
        notebook_section,
        {
            "url": "https://pmyvdlilci.execute-api.us-east-1.amazonaws.com/klab",
            "name": "neuromatch_cn",
            "user_key": "y1x3mpx5",
        },
    ).render()

feedback_prefix = "W1D4_T1"

# Imports

import numpy as np

import matplotlib.pyplot as plt

Figure Settings#

# @title Figure Settings

import logging

logging.getLogger('matplotlib.font_manager').disabled = True

import ipywidgets as widgets # interactive display

%config InlineBackend.figure_format = 'retina'

plt.style.use("https://raw.githubusercontent.com/NeuromatchAcademy/course-content/main/nma.mplstyle")

Plotting Functions#

# @title Plotting Functions

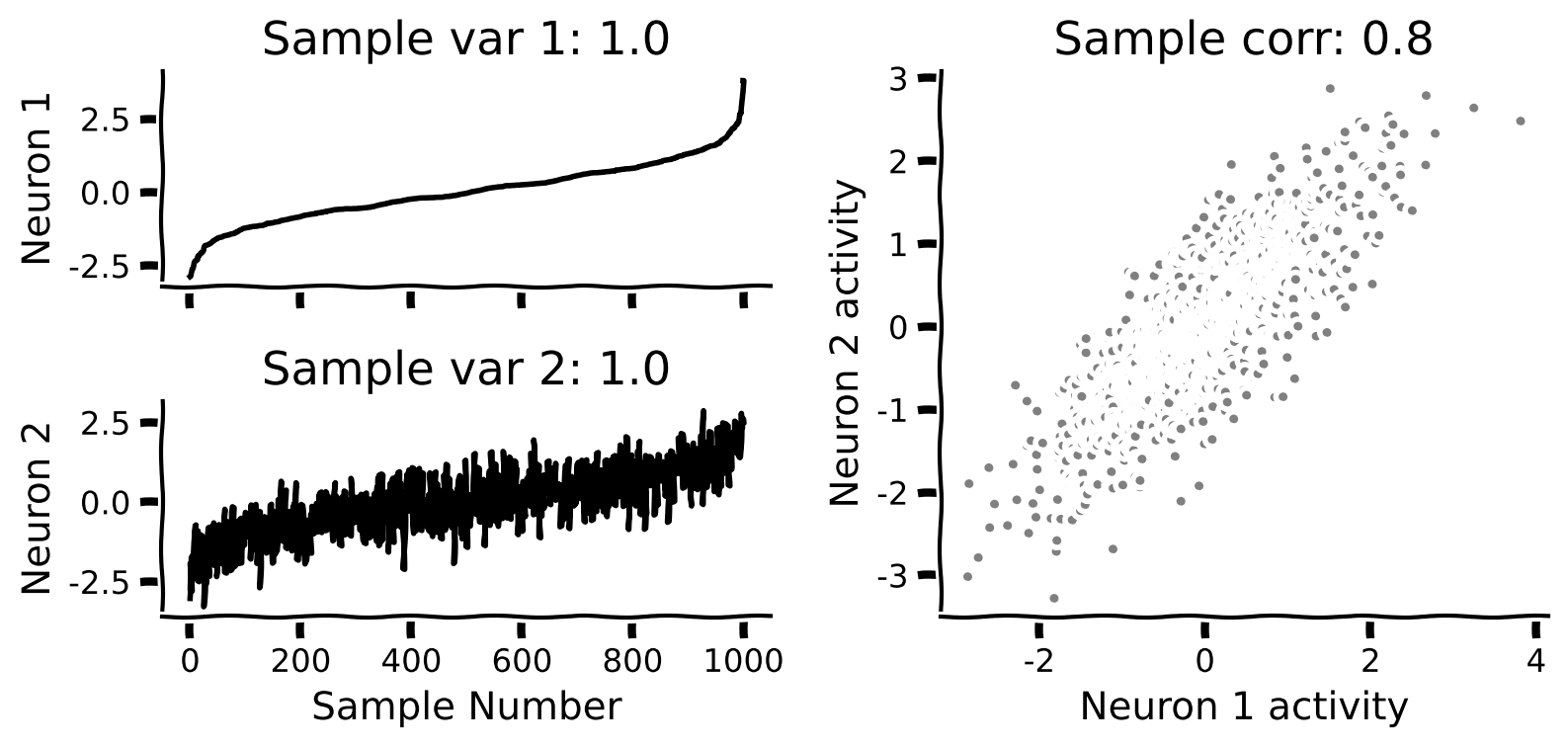

def plot_data(X):
    """
    Plots bivariate data. Includes a plot of each random variable, and a scatter
    plot of their joint activity. The title indicates the sample correlation
    calculated from the data.

    Args:
      X (numpy array of floats) : Data matrix each column corresponds to a
                                  different random variable

    Returns:
      Nothing.
    """
    fig = plt.figure(figsize=[8, 4])
    gs = fig.add_gridspec(2, 2)
    ax1 = fig.add_subplot(gs[0, 0])
    ax1.plot(X[:, 0], color='k')
    plt.ylabel('Neuron 1')
    plt.title(f'Sample var 1: {np.var(X[:, 0]):.1f}')
    ax1.set_xticklabels([])
    ax2 = fig.add_subplot(gs[1, 0])
    ax2.plot(X[:, 1], color='k')
    plt.xlabel('Sample Number')
    plt.ylabel('Neuron 2')
    plt.title(f'Sample var 2: {np.var(X[:, 1]):.1f}')
    ax3 = fig.add_subplot(gs[:, 1])
    ax3.plot(X[:, 0], X[:, 1], '.', markerfacecolor=[.5, .5, .5],
             markeredgewidth=0)
    ax3.axis('equal')
    plt.xlabel('Neuron 1 activity')
    plt.ylabel('Neuron 2 activity')
    plt.title(f'Sample corr: {np.corrcoef(X[:, 0], X[:, 1])[0, 1]:.1f}')
    plt.show()

def plot_basis_vectors(X, W):
    """
    Plots bivariate data as well as new basis vectors.

    Args:
      X (numpy array of floats) : Data matrix each column corresponds to a
                                  different random variable
      W (numpy array of floats) : Square matrix representing new orthonormal
                                  basis each column represents a basis vector

    Returns:
      Nothing.
    """
    plt.figure(figsize=[4, 4])
    plt.plot(X[:, 0], X[:, 1], '.', color=[.5, .5, .5], label='Data')
    plt.axis('equal')
    plt.xlabel('Neuron 1 activity')
    plt.ylabel('Neuron 2 activity')
    plt.plot([0, W[0, 0]], [0, W[1, 0]], color='r', linewidth=3,
             label='Basis vector 1')
    plt.plot([0, W[0, 1]], [0, W[1, 1]], color='b', linewidth=3,
             label='Basis vector 2')
    plt.legend()
    plt.show()

def plot_data_new_basis(Y):
    """
    Plots bivariate data after transformation to new bases.
    Similar to plot_data but with colors corresponding to projections onto
    basis 1 (red) and basis 2 (blue). The title indicates the sample correlation
    calculated from the data.

    Note that samples are re-sorted in ascending order for the first
    random variable.

    Args:
      Y (numpy array of floats) : Data matrix in new basis each column
                                  corresponds to a different random variable

    Returns:
      Nothing.
    """
    fig = plt.figure(figsize=[8, 4])
    gs = fig.add_gridspec(2, 2)
    ax1 = fig.add_subplot(gs[0, 0])
    ax1.plot(Y[:, 0], 'r')
    plt.ylabel('Projection \n basis vector 1')
    plt.title(f'Sample var 1: {np.var(Y[:, 0]):.1f}')
    ax1.set_xticklabels([])
    ax2 = fig.add_subplot(gs[1, 0])
    ax2.plot(Y[:, 1], 'b')
    plt.xlabel('Sample number')
    plt.ylabel('Projection \n basis vector 2')
    plt.title(f'Sample var 2: {np.var(Y[:, 1]):.1f}')
    ax3 = fig.add_subplot(gs[:, 1])
    ax3.plot(Y[:, 0], Y[:, 1], '.', color=[.5, .5, .5])
    ax3.axis('equal')
    plt.xlabel('Projection basis vector 1')
    plt.ylabel('Projection basis vector 2')
    plt.title(f'Sample corr: {np.corrcoef(Y[:, 0], Y[:, 1])[0, 1]:.1f}')
    plt.show()

Video 1: Geometric view of data#

Submit your feedback#

# @title Submit your feedback

content_review(f"{feedback_prefix}_Geometric_view_of_data_Video")

Section 2: Define a new orthonormal basis#

Estimated timing to here from start of tutorial: 20 min

Video 3: Orthonormal bases#

Submit your feedback#

# @title Submit your feedback

content_review(f"{feedback_prefix}_Orthonormal_Bases_Video")

This video shows that data can be represented in many ways using different bases. It also explains how to check if your favorite basis is orthonormal.

Next, we will define a new orthonormal basis of vectors \({\bf u} = [u_1,u_2]\) and \({\bf w} = [w_1,w_2]\). As we learned in the video, two vectors are orthonormal if:

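They are orthogonal (i.e., their dot product is zero):

\[
{\bf u \cdot w} = u_1 w_1 + u_2 w_2 = 0
\]

They have unit length:

\[
||{\bf u}|| = ||{\bf w}|| = 1
\]

In two dimensions, it is easy to make an arbitrary orthonormal basis. All we need is a random vector \({\bf u}\), which we have normalized. If we now define the second basis vector to be \({\bf w} = [-u_2,u_1]\), we can check that both conditions are satisfied:

\[
{\bf u \cdot w} = -u_1 u_2 + u_2 u_1 = 0
\]

and

\[
||{\bf w}|| = \sqrt{(-u_2)^2 + u_1^2} = \sqrt{u_1^2 + u_2^2} = 1,
\]

where we used the fact that \({\bf u}\) is normalized. So, with an arbitrary input vector, we can define an orthonormal basis, which we will write in matrix form by stacking the basis vectors horizontally:

\[
{\bf W} = \begin{pmatrix} u_1 & w_1 \\ u_2 & w_2 \end{pmatrix}
\]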
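The construction described above (normalize \({\bf u}\), then set \({\bf w} = [-u_2,u_1]\)) can be checked numerically. The following is a minimal sketch, not part of the original tutorial: the random starting vectors and the seed are arbitrary choices made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)  # arbitrary seed, for reproducibility only

for _ in range(3):
    u = rng.normal(size=2)            # arbitrary starting vector
    u = u / np.linalg.norm(u)         # normalize to unit length
    w = np.array([-u[1], u[0]])       # second basis vector, w = [-u_2, u_1]
    W = np.column_stack([u, w])       # basis vectors as columns

    print("u . w =", u @ w)
    print("lengths:", np.linalg.norm(u), np.linalg.norm(w))
    print("W^T W close to identity:", np.allclose(W.T @ W, np.eye(2)))
```

Checking that \({\bf W}^\top {\bf W}\) is close to the identity matrix tests both conditions at once: the diagonal entries are the squared lengths, and the off-diagonal entries are the dot product.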

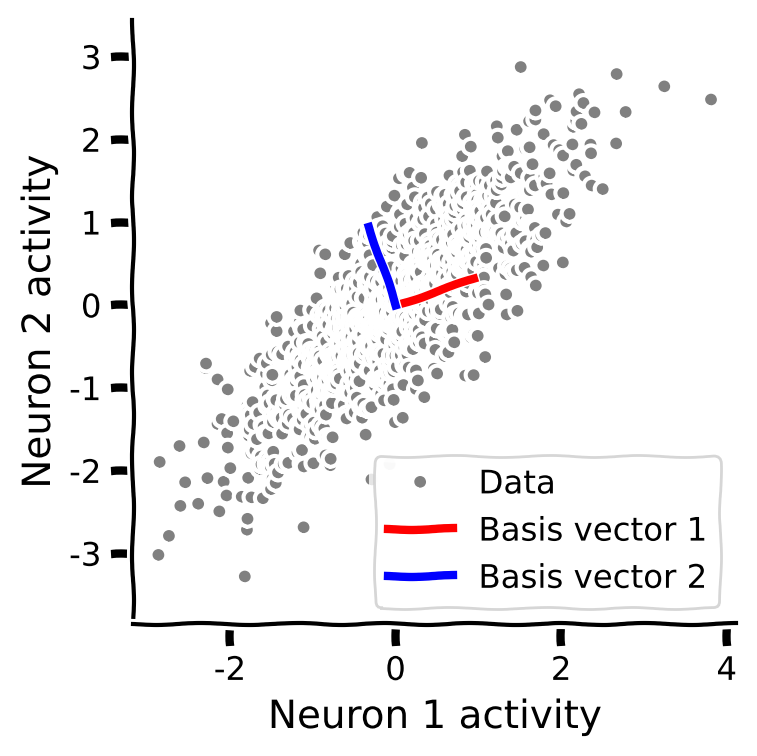

Coding Exercise 2: Find an orthonormal basis#

In this exercise you will fill in the function below to define an orthonormal basis, given a single arbitrary 2-dimensional vector as an input.

Steps

Modify the function define_orthonormal_basis to first normalize the first basis vector \(\bf u\).

Then complete the function by finding a basis vector \(\bf w\) that is orthogonal to \(\bf u\).

Test the function using initial basis vector \({\bf u} = [3,1]\). Plot the resulting basis vectors on top of the data scatter plot using the function plot_basis_vectors. (For the data, use \(\sigma_1^2 =1\), \(\sigma_2^2 =1\), and \(\rho = .8\)).
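The test code for this exercise calls calculate_cov_matrix and get_data, which are defined in an earlier part of the tutorial that is not reproduced here. The stand-ins below are a sketch under stated assumptions, not the original definitions: they assume zero-mean bivariate Gaussian data, 1000 samples, and rows sorted by the first variable (as the plot_data_new_basis docstring suggests).

```python
import numpy as np


def calculate_cov_matrix(var_1, var_2, corr_coef):
    """Stand-in: 2x2 covariance matrix from two variances and a correlation."""
    cov = corr_coef * np.sqrt(var_1 * var_2)  # covariance = rho * sigma_1 * sigma_2
    return np.array([[var_1, cov], [cov, var_2]])


def get_data(cov_matrix, n_samples=1000):
    """Stand-in: zero-mean bivariate Gaussian samples, sorted by column 0.

    The sample count and the sorting are assumptions, not taken from the source.
    """
    mean = np.zeros(2)
    X = np.random.multivariate_normal(mean, cov_matrix, size=n_samples)
    return X[np.argsort(X[:, 0])]  # sort rows by the first variable


np.random.seed(2020)
X = get_data(calculate_cov_matrix(1, 1, 0.8))
print(X.shape)
print(np.corrcoef(X[:, 0], X[:, 1])[0, 1])
```

With these stand-ins in place, the exercise cell should run once define_orthonormal_basis is completed.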

def define_orthonormal_basis(u):
    """
    Calculates an orthonormal basis given an arbitrary vector u.

    Args:
      u (numpy array of floats) : arbitrary 2-dimensional vector used for new
                                  basis

    Returns:
      (numpy array of floats)   : new orthonormal basis
                                  columns correspond to basis vectors
    """
    #################################################
    ## TODO for students: calculate the orthonormal basis
    # Fill out function and remove
    raise NotImplementedError("Student exercise: implement the orthonormal basis function")
    #################################################

    # Normalize vector u
    u = ...

    # Calculate vector w that is orthogonal to u
    w = ...

    # Put in matrix form
    W = np.column_stack([u, w])

    return W

# Set up parameters

np.random.seed(2020) # set random seed

variance_1 = 1

variance_2 = 1

corr_coef = 0.8

u = np.array([3, 1])

# Compute covariance matrix

cov_matrix = calculate_cov_matrix(variance_1, variance_2, corr_coef)

# Generate data

X = get_data(cov_matrix)

# Get orthonormal basis

W = define_orthonormal_basis(u)

# Visualize

plot_basis_vectors(X, W)

Example output:

Submit your feedback#

# @title Submit your feedback

content_review(f"{feedback_prefix}_Find_an_orthonormal_basis_Exercise")

Section 3: Project data onto new basis#

Estimated timing to here from start of tutorial: 35 min

Video 4: Change of basis#

Submit your feedback#

# @title Submit your feedback

content_review(f"{feedback_prefix}_Change_of_basis_Video")

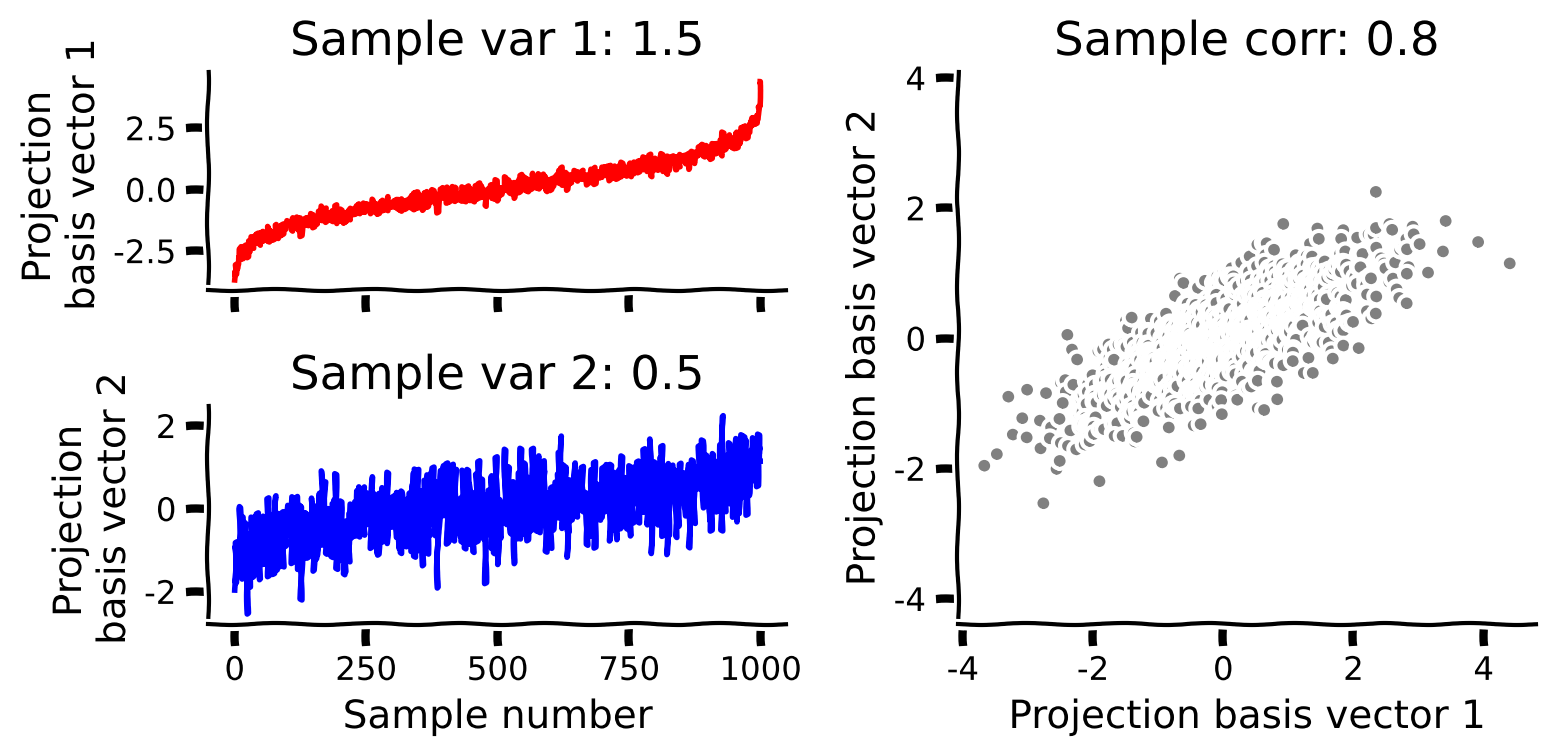

Finally, we will express our data in the new basis that we have just found. Since \(\bf W\) is orthonormal, we can project the data into our new basis using simple matrix multiplication:

\[
{\bf Y} = {\bf X W}
\]

We will explore the geometry of the transformed data \(\bf Y\) as we vary the choice of basis.
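As a small worked example, take the basis from Coding Exercise 2 built from \({\bf u} = [3,1]\), with \({\bf w} = [-u_2,u_1]\) after normalization. The data point \({\bf x} = [1, 1]\) is made up for illustration. Projecting it gives:

\[
{\bf W} = \frac{1}{\sqrt{10}}\begin{pmatrix} 3 & -1 \\ 1 & 3 \end{pmatrix}, \qquad
{\bf y} = {\bf x W} = \frac{1}{\sqrt{10}}\,[\,3 + 1,\; -1 + 3\,] = \frac{1}{\sqrt{10}}\,[4,\; 2] \approx [1.26,\; 0.63]
\]

Each entry of \({\bf y}\) is the dot product of \({\bf x}\) with one basis vector. The length of the point is unchanged, since \(||{\bf y}||^2 = (16 + 4)/10 = 2 = ||{\bf x}||^2\), as expected for an orthonormal basis.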

Coding Exercise 3: Change to orthonormal basis#

In this exercise you will fill in the function below to change data to an orthonormal basis.

Steps

Complete the function change_of_basis to project the data onto the new basis.

Plot the projected data using the function plot_data_new_basis.

What happens to the correlation coefficient in the new basis? Does it increase or decrease?

What happens to variance?

def change_of_basis(X, W):
    """
    Projects data onto new basis W.

    Args:
      X (numpy array of floats) : Data matrix each column corresponding to a
                                  different random variable
      W (numpy array of floats) : new orthonormal basis columns correspond to
                                  basis vectors

    Returns:
      (numpy array of floats)    : Data matrix expressed in new basis
    """
    #################################################
    ## TODO for students: project the data onto a new basis W
    # Fill out function and remove
    raise NotImplementedError("Student exercise: implement change of basis")
    #################################################

    # Project data onto new basis described by W
    Y = ...

    return Y

# Project data to new basis

Y = change_of_basis(X, W)

# Visualize

plot_data_new_basis(Y)
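One possible way to complete the exercise is sketched below, end to end and without plotting. It is not the tutorial's reference solution. The function name change_of_basis_sketch is hypothetical, and the data are generated directly from an assumed zero-mean Gaussian with unit variances and correlation 0.8 rather than with the tutorial's own helpers.

```python
import numpy as np

rng = np.random.default_rng(2020)

# Assumed stand-in for the tutorial's data: zero-mean bivariate Gaussian
# with unit variances and correlation 0.8.
cov_matrix = np.array([[1.0, 0.8], [0.8, 1.0]])
X = rng.multivariate_normal(np.zeros(2), cov_matrix, size=1000)

# Orthonormal basis built from u = [3, 1], as in Coding Exercise 2.
u = np.array([3.0, 1.0])
u = u / np.linalg.norm(u)
W = np.column_stack([u, [-u[1], u[0]]])


def change_of_basis_sketch(X, W):
    """One possible completion (hypothetical name): project X onto columns of W."""
    return X @ W


Y = change_of_basis_sketch(X, W)

print("corr before:", np.corrcoef(X[:, 0], X[:, 1])[0, 1])
print("corr after: ", np.corrcoef(Y[:, 0], Y[:, 1])[0, 1])
print("variances before:", np.var(X, axis=0))
print("variances after: ", np.var(Y, axis=0))
```

The individual variances change with the basis, but their sum does not: an orthonormal change of basis redistributes the total variance across the new axes without creating or removing any.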

Example output:

Submit your feedback#

# @title Submit your feedback

content_review(f"{feedback_prefix}_Change_to_orthonormal_basis_Exercise")

Interactive Demo 3: Play with the basis vectors#

To see what happens to the correlation as we change the basis vectors, run the cell below. The parameter \(\theta\) controls the angle of \(\bf u\) in degrees. Use the slider to rotate the basis vectors.

What happens to the projected data as you rotate the basis?

How does the correlation coefficient change? How does the variance of the projection onto each basis vector change?

Are you able to find a basis in which the projected data is uncorrelated?

Make sure you execute this cell to enable the widget!

# @markdown Make sure you execute this cell to enable the widget!

def refresh(theta=0):
    u = np.array([1, np.tan(theta * np.pi / 180)])
    W = define_orthonormal_basis(u)
    Y = change_of_basis(X, W)
    plot_basis_vectors(X, W)
    plot_data_new_basis(Y)


_ = widgets.interact(refresh, theta=(0, 90, 5))
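For readers without a live widget, the same sweep over \(\theta\) can be run as a plain loop. This is a rough, non-interactive sketch and not part of the original demo. It assumes zero-mean Gaussian data with unit variances and correlation 0.8, and it rebuilds the basis inline instead of calling the exercise functions.

```python
import numpy as np

rng = np.random.default_rng(2020)
cov_matrix = np.array([[1.0, 0.8], [0.8, 1.0]])  # assumed: unit variances, rho = 0.8
X = rng.multivariate_normal(np.zeros(2), cov_matrix, size=1000)

thetas = np.arange(0, 91, 5)  # same grid as the slider, in degrees
corrs = []
for theta in thetas:
    u = np.array([1.0, np.tan(np.deg2rad(theta))])
    u = u / np.linalg.norm(u)                 # first basis vector at angle theta
    W = np.column_stack([u, [-u[1], u[0]]])   # orthonormal basis, columns u and w
    Y = X @ W                                 # data expressed in the new basis
    corrs.append(np.corrcoef(Y[:, 0], Y[:, 1])[0, 1])
corrs = np.array(corrs)

best = thetas[np.argmin(np.abs(corrs))]
for theta, r in zip(thetas, corrs):
    print(f"theta = {theta:2d} deg  corr = {r:+.2f}")
print("smallest |corr| at theta =", best, "deg")
```

With equal variances and a positive correlation, the cloud is elongated along the diagonal, so the smallest correlation should appear at or near \(\theta = 45\) degrees. The exact value depends on the sample drawn.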

Submit your feedback#

# @title Submit your feedback

content_review(f"{feedback_prefix}_Play_with_basis_vectors_Interactive_Demo_and_Discussion")

Summary#

Estimated timing of tutorial: 50 minutes

In this tutorial, we learned that multivariate data can be visualized as a cloud of points in a high-dimensional vector space. The geometry of this cloud is shaped by the covariance matrix.

Multivariate data can be represented in a new orthonormal basis using the dot product. These new basis vectors correspond to specific mixtures of the original variables - for example, in neuroscience, they could represent different ratios of activation across a population of neurons.

The projected data (after transforming into the new basis) will generally have a different geometry from the original data. In particular, taking basis vectors that are aligned with the spread of the cloud of points decorrelates the data.

These concepts - covariance, projections, and orthonormal bases - are key for understanding PCA, which will be our focus in the next tutorial.